The uncertainty project seeks to develop new ways to calculate confidence limits by applying geometric arithmetic to existing theory and re-deriving that theory in the new geometry-based format. The aim is to improve both understanding and utility: the approach dovetails with the novel error arithmetic and alleviates limitations of current methods.

Uncertainty calculation essentially places an envelope, the confidence limits, around the scatter of N instances of a data sample so that the envelope meets a specified probability. The traditional approach treats the standard deviation as an independent error component transmitted from the scatter of each channel, which requires N > 1. The scatter is also treated as a set of deviations from a central datum, such as the mean.
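For reference, the traditional calculation described above can be sketched in Python using only the standard library. This is a minimal sketch of the conventional method, not the project's geometry-based one: it forms deviations from the sample mean, estimates the standard deviation with the N - 1 divisor (hence N > 1), and sets two-sided confidence limits on the mean with a Student's t factor. The function names `t_pdf`, `t_cdf`, `t_quantile`, and `confidence_limits` are hypothetical, and the t quantile is found by Simpson integration and bisection rather than taken from a statistics library.

```python
import math


def t_pdf(x: float, df: int) -> float:
    """Student's t probability density with df degrees of freedom."""
    # lgamma avoids the overflow math.gamma would hit for large df
    log_coef = math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
    coef = math.exp(log_coef) / math.sqrt(df * math.pi)
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)


def t_cdf(x: float, df: int, steps: int = 2000) -> float:
    """Student's t CDF via Simpson's rule on [0, |x|] and symmetry about 0."""
    a = abs(x)
    h = a / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(a, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3
    return 0.5 + area if x >= 0 else 0.5 - area


def t_quantile(p: float, df: int) -> float:
    """Upper quantile of Student's t for 0.5 < p < 1, by bisection on t_cdf."""
    if not 0.5 < p < 1:
        raise ValueError("p must lie in (0.5, 1)")
    lo, hi = 0.0, 1.0
    while t_cdf(hi, df) < p:  # widen the bracket until it contains the quantile
        hi *= 2
    for _ in range(60):
        mid = (lo + hi) / 2
        if t_cdf(mid, df) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def confidence_limits(sample: list[float], probability: float = 0.95) -> tuple[float, float]:
    """Traditional two-sided confidence limits on the mean of a sample."""
    n = len(sample)
    if n < 2:
        raise ValueError("need N > 1 to estimate a standard deviation")
    mean = sum(sample) / n  # central datum
    deviations = [x - mean for x in sample]  # scatter about the datum
    s = math.sqrt(sum(d * d for d in deviations) / (n - 1))  # sample standard deviation
    t = t_quantile(0.5 + probability / 2, n - 1)  # two-sided coverage factor
    half_width = t * s / math.sqrt(n)
    return mean - half_width, mean + half_width
```

For example, `confidence_limits([1.0, 2.0, 3.0, 4.0, 5.0])` gives roughly (1.037, 4.963): the mean is 3, s ≈ 1.581, and t ≈ 2.776 for 4 degrees of freedom. If a SciPy dependency is acceptable, `scipy.stats.t.ppf` could replace the hand-rolled quantile.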

When a data set is not available, one can be generated by the Monte-Carlo method: first specify a distribution and its statistics for each channel, such as the mean and standard deviation, and then draw instances with a random number generator. One limitation is that the statistics must be known in advance and the channels or instance deviations are assumed independent. Another is that the Monte-Carlo method needs a large random sample size, N, to give stable results. Under these conditions, the central limit theorem shows that, whatever distribution you specify for the random instances (provided its variance is finite), the distribution of the mean approaches a normal distribution as N grows. If the data channels are dependent and the sample size is finite, normality is not guaranteed and the properties derived from it are not reliable. Although Monte-Carlo is easy to use, these limitations motivate the search for faster methods that require less specification.
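A minimal Monte-Carlo sketch of the procedure just described, assuming independent, normally distributed channels with known mean and standard deviation that are combined by a simple sum. The function names, the channel values, and the choice of a sum are illustrative assumptions. The root-sum-square check is only valid because the channels are independent. A second helper illustrates the central limit theorem: means of uniform samples cluster approximately normally around 0.5.

```python
import math
import random
import statistics


def monte_carlo_total(channels, n, seed=0):
    """Simulate the sum of independent normal channels; return its mean and spread.

    channels: list of (mean, standard_deviation) pairs that must be known in advance.
    n: number of random instances; stable estimates need a large n.
    """
    rng = random.Random(seed)  # seeded generator so runs are repeatable
    totals = [sum(rng.gauss(mu, sd) for mu, sd in channels) for _ in range(n)]
    return statistics.mean(totals), statistics.stdev(totals)


def theoretical_total_sd(channels):
    """Root-sum-square of channel standard deviations; valid only for independent channels."""
    return math.sqrt(sum(sd * sd for _, sd in channels))


def means_of_uniform_samples(sample_size, repeats, seed=0):
    """Means of uniform(0, 1) samples; by the central limit theorem they are approximately normal."""
    rng = random.Random(seed)
    return [sum(rng.random() for _ in range(sample_size)) / sample_size for _ in range(repeats)]


channels = [(10.0, 1.0), (5.0, 2.0)]  # hypothetical channel statistics
for n in (100, 100_000):
    mean, sd = monte_carlo_total(channels, n)
    print(n, round(mean, 3), round(sd, 3), "expected sd", round(theoretical_total_sd(channels), 3))
```

With these channels the simulated total should approach a mean of 15 and a standard deviation of sqrt(1 + 4) ≈ 2.236 as `n` grows. Rerunning the `n = 100` case with different seeds shows how much the estimates wander when N is small, which is the cost of stability noted above.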

The uncertainty project progressed from formatting each instance of each channel as a dual (a scaled point and a two-sided error vector; see the geometric arithmetic project), to adopting a generic but uniform datum, and then to formatting each instance as an oblique-triangle geometry that spans the measurement and physical spaces. Using results from the Statistics Project, a sample is constructed by combining the instances of duals and taking a total of duals. This creates a right-triangle geometry for totals and standards that spans the measurement and physical spaces. The work also makes explicit the theoretical difference between errors and deviations.

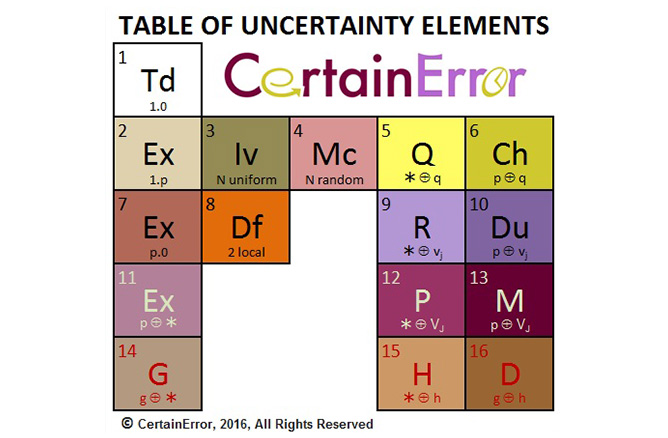

Geometry gives a new picture of statistics and uncertainty. The table image shows the different uncertainty methods classified by the type of geometry chosen. Some uncertainty elements are special cases obtained by nullifying part of a more general geometry. For example, at most levels, an exact version of a method (denoted Ex) is obtained by nullifying the error geometry, that is, by specifying zero scalars for it. This provides a direct way to validate uncertainty calculations by comparing exact arithmetic (Ex) with traditional arithmetic (Td).

One result is a new theory that explains standardization scaling for both errors and deviations. The right-triangle geometry yields a new derivation of the Student's t sampling distribution using duals arithmetic, and of confidence limits for a desired probability. The first benefit is a direct method of uncertainty analysis that eliminates the speed limitations of Monte-Carlo. A second benefit is that uncertainty is recast without the burden of placing distribution assumptions on data and error. The uncertainty is built into every number and is calculated simultaneously and seamlessly.